Lately I have been helping some people negotiate a novel use-case for digital identity: online invigilation. This has turned into an interesting case study in horseless-carriage (1) thinking.

The Scene.

Universities are being challenged by the internet, online learning and the rise of new providers like Udacity. Online courses can attract student numbers that traditional labour-intensive teaching can’t handle. It’s too expensive to mark 100,000 assignments by hand. You need automated assessment. It is cheap and scales well. It can also make cheating relatively easy.

That presents a real problem for universities. The reputation of the qualifications they provide depends on the rigour of their assessment processes. If it’s too easy to cheat, and too hard to catch the cheats, the results become meaningless, even if those results carry the imprimatur of Oxford or MIT. To compete in internet-scale education markets, universities need to use automated assessment. To retain the value of the qualifications they issue, universities need to prevent cheating. In many universities this problem has been interpreted as a need for automated invigilation.

Place the eyeball in the hopper…

To those trying to stop online cheating (where it involves fraudulent identity), biometrics might seem like a no-brainer. We have all seen the palmprint and retina scanners on TV and in films. iPhones now come with built-in fingerprint scanners. Surely this is now a usable technology with the potential to solve the online cheating problem? (2)

Unfortunately, no. Online cheating has two characteristics that completely alter the nature of the problem:

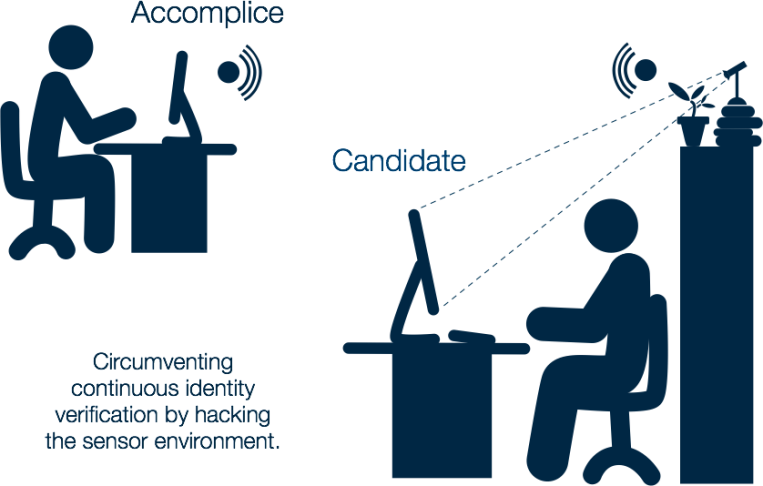

- The exam environment (and by extension the sensor environment) is uncontrolled.

- The fake candidate is an accomplice. They have the full cooperation of the genuine candidate.

The environment matters because the information environment extends beyond the security environment. The security environment ends at the hardware: the keyboard, mouse, screen, etc. But information from the assessment can be seen and heard by anyone in the room. It is possible to get the information without interacting with the system.

The complicity between the legitimate candidate and their accomplice means the accomplice has access to everything the candidate has, including their body.

Failing to understand these points leads to silly measures.

It is common for online assessments to use software that locks other applications on the candidate’s computer. This is to prevent them from keeping a search page or a PDF open during an assessment.

I have actually heard it suggested that a candidate could be required to periodically film the rest of the room to show no one was assisting off-camera, which is tricky if you are using a 27-inch iMac.

Of course, continuous verification techniques, such as repeated sampling of the candidate’s face or analysis of their typing patterns during a session, are reasonably commonplace in commercial offerings.

Complicity and an uncontrolled environment make it pretty easy to get around such measures…

Even if you figure out how to prevent direct-cable or over-the-network mirroring for every possible endpoint device, it doesn’t take a lot of imagination to hack the physical environment without touching the system at all…

These are just simple schemas. But the principle is sound.

The candidate can provide all the data, the requisite face, fingerprint, and typing kinesthetics. Every assessment can be filmed and keylogged in its entirety for reactive or statistical auditing. The system can work perfectly because the system itself was never subverted and was allowed to function as intended.

It doesn’t matter if you use physical or behavioural biometric identifiers, or some hybrid of normal multi-factor authentication and biometric verification.

Stereotypes are not just a race-thing

People don’t just stereotype other people. We stereotype everything – pets, furniture, TV shows, food. Patterns exert a huge influence over our minds because they are so incredibly useful.

We stereotype digital identity too.

The paradigmatic use-case for digital identity services is access control and theft prevention. In conventional security, when identity theft (deception) occurs it is usually as a means of accessing a resource. The function identity services perform to prevent fraudulent access is authentication.

Cheating on a test is not a case of fraudulent access, it is a case of deception. The function we need an identity service to perform to prevent deception is verification. (4)

I know of no current technology that can reliably verify where the knowledge is coming from in an online assessment – when the assessment environment is uncontrolled.

The idea that there must be a way to use technology to prevent online cheating persists, and some vendors stake their livelihoods on it. (3) And I have seen how easily people confuse verification and authentication, including people who should know better: highly tech-literate people with years of systems development experience.

This suggests to me that access control is the paradigmatic use-case for digital identity.

Access control has so stereotyped our thinking, and exerts such a strong horseless-carriage effect, that it degrades our ability to think clearly through other use-cases for digital identity.

Notes:

(1) Horseless-carriage thinking is when our understanding of something new is coloured and limited by the thing it is replacing. When people first started to make automobiles, they were understood in terms of the horses and carriages they were replacing. It took a while for them to become ‘cars’. I discuss the consequences of horseless-carriage thinking in Moon Towers and Radiated Libraries.

(2) I am not going to go into the ins and outs of subverting biometric sensors, or the often gruesome measures depicted in film. In reality, biometrics are not vulnerable to literal ‘hacking’ measures. Moreover, as this post discusses, the cheating use-case doesn’t require violent measures at all.

(3) There are of course other alternatives. One can rethink assessment methods. Multiple-choice examinations and short-answer assessments are simple to cheat. There may be different ways of reliably establishing a student’s competency that lend themselves to more reliable automation, much as plagiarism detection software has amplified the ability of human examiners to detect that kind of cheating. We may need to use a resampling technique to discourage rather than prevent cheating. These questions, while interesting, are an aside. My interest is in how such basic limitations on online assessment go unrecognised, and why a faith in the viability of automated solutions persists.

(4) In the Hollywood movie scenarios of secret facilities protected by retinal scanners, these use-cases overlap somewhat. But the impostor, like the password hacker, is still deceiving to gain access. The exam cheat is not deceiving to gain access. They have access. Access is the least of their problems. And as they are an accomplice to the legitimate candidate, anything we do to block the deceiver’s access would also restrict the candidate’s access.